Cache Memory: Your System's Speedy Friend

Memory Matters #7

In the realm of computing, speed is the name of the game, and cache memory is a game-changer. Its ability to accelerate data retrieval and processing is unparalleled, making it an indispensable component for achieving optimal system performance. Imagine a scenario where your processor constantly has to fetch data from main memory, which is comparatively slower. This constant back-and-forth communication creates bottlenecks, leading to sluggish performance and frustrating delays.

Cache memory is a high-speed storage component that acts as a bridge between your computer's processor and main memory (DRAM). Its primary function is to store frequently accessed data (in the data cache, D$) and instructions (in the instruction cache, I$), thereby reducing the time required to fetch this information from the slower main memory [1]. Main memory offers larger storage capacity but has slower access times than cache. By keeping frequently used data closer to the processor, cache memory reduces that latency and significantly enhances overall system responsiveness.

Cache memory is crucial for optimizing processor performance, but integrating it into mainstream computing is expensive. Adding a large cache significantly increases cost, so there is a tradeoff between speed and capacity: cache is fast but small, while main memory is large but slow. As processors become more complex and capable of advanced multitasking techniques, efficient data management and distribution become more important. Cache memory helps alleviate bottlenecks by providing a dedicated quick store for each core, ensuring quick access to data and instructions. Gaming, multimedia editing, and resource-intensive software benefit greatly from cache memory.

Fundamentals of Cache Memory

When the CPU core requests data from memory, it first checks the cache. The processor uses a portion of the memory address to identify the specific cache line where the data may be located. This portion is usually the least significant bits of the memory address, excluding the byte offset. The cache controller then compares the tag stored in that cache line (representing the rest of the memory address) with the corresponding bits of the requested address. If they match, it's a cache hit, and the data is quickly retrieved from the cache. If they don't match, it's a cache miss, and the system must fetch the data from main memory, replacing the existing content in that cache line.
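The lookup described above can be sketched in a few lines of Python. This is only an illustration: the cache geometry (64-byte lines, 256 lines) and the name `split_address` are assumptions made for the example, not details of any particular processor.

```python
# Hypothetical geometry, chosen only for illustration.
LINE_SIZE = 64     # bytes per cache line -> 6 byte-offset bits
NUM_LINES = 256    # lines in the cache   -> 8 index bits

OFFSET_BITS = LINE_SIZE.bit_length() - 1   # log2(64)  = 6
INDEX_BITS = NUM_LINES.bit_length() - 1    # log2(256) = 8


def split_address(addr):
    """Split a byte address into (tag, index, offset)."""
    offset = addr & (LINE_SIZE - 1)                  # lowest bits: byte within the line
    index = (addr >> OFFSET_BITS) & (NUM_LINES - 1)  # next bits: which cache line to check
    tag = addr >> (OFFSET_BITS + INDEX_BITS)         # remaining bits: compared on lookup
    return tag, index, offset


if __name__ == "__main__":
    tag, index, offset = split_address(0x12345678)
    print(f"tag={tag:#x} index={index:#x} offset={offset:#x}")
```

Two addresses with the same index but different tags compete for the same cache line, which is where misses of the replacing kind come from.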

CPU Cache Access

The CPU's interaction with the cache is a critical process that significantly impacts system performance. When the CPU needs to access data, it first checks the cache using three key components: the index, data, and tag [2]. The index determines which cache line to examine, the data field contains the actual information, and the tag helps verify whether the cached data matches the requested memory address. This, along with focused coherency protocols, allows the cache to be accessed efficiently in a system. The efficiency of this process relies on the principle of locality, both temporal (recently accessed data is likely to be accessed again) and spatial (data near recently accessed locations is likely to be needed).

Mapped Cache Schemes

Cache mapping schemes primarily fall into three categories: direct-mapped, fully associative, and set-associative. A direct-mapped cache assigns each memory block to a specific cache line, offering simplicity but potentially leading to conflicts. Fully associative caches allow any memory block to be placed in any cache line, providing flexibility at the cost of complex hardware for searching. A set-associative cache strikes a balance between the two: it divides the cache into sets and allows a memory block to be placed in any line within its designated set. The choice of mapping scheme significantly impacts cache performance, affecting hit rates, access times, and hardware complexity [3].

Direct-Mapped Cache

A direct-mapped cache is a simple and efficient cache organization method. Each memory block has a predetermined location in the cache. Mapping between memory blocks and cache lines is done using a modulo operation on the memory address. This fixed mapping simplifies the cache lookup process but can lead to conflicts when multiple memory blocks map to the same cache line.

The simplicity of direct-mapped caches allows them to be fast and easy to implement in hardware. However, they can suffer from a higher miss rate compared to more complex cache organizations, especially when multiple frequently accessed memory blocks map to the same cache line. This situation, known as cache thrashing, occurs when the program repeatedly accesses different memory locations that happen to map to the same cache line, causing frequent cache misses and replacements.
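To make the modulo mapping and the thrashing case concrete, here is a minimal sketch of a direct-mapped cache that tracks tags only. The class name `DirectMappedCache` and the geometry (8 lines of 64 bytes) are assumptions for illustration; real hardware does this with wiring and comparators, not a Python list.

```python
class DirectMappedCache:
    """Toy direct-mapped cache that stores tags only (no data payload)."""

    def __init__(self, num_lines=8, line_size=64):
        self.num_lines = num_lines
        self.line_size = line_size
        self.tags = [None] * num_lines   # one tag slot per cache line
        self.hits = 0
        self.misses = 0

    def access(self, addr):
        """Look up a byte address; return True on a hit, False on a miss."""
        block = addr // self.line_size
        index = block % self.num_lines   # the modulo mapping
        tag = block // self.num_lines
        if self.tags[index] == tag:
            self.hits += 1
            return True
        self.tags[index] = tag           # replace whatever occupied the line
        self.misses += 1
        return False


if __name__ == "__main__":
    cache = DirectMappedCache()
    # Addresses 0 and 512 are 8 lines apart, so both map to line 0.
    for _ in range(4):
        cache.access(0)
        cache.access(512)
    print(cache.hits, cache.misses)
```

In this model the alternating pattern never hits, even though the other seven lines sit empty, which is the thrashing behavior described above.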

Fully Associative Cache

A fully associative cache allows any block of main memory to be stored in any cache line. Unlike direct-mapped or set-associative caches, which restrict where specific memory blocks can be placed, a fully associative cache offers maximum flexibility in data placement. This flexibility comes at the cost of increased complexity in the cache controller and potentially longer search times.

A key feature of a fully associative cache is its ability to store any memory block in any available cache line. When the processor requests data, the cache controller must search all cache lines simultaneously to determine if the requested data is present. This search is typically implemented using content-addressable memory (CAM), which allows for parallel comparison of the requested memory address against all stored addresses.

When a new block of data needs to be brought into a fully associative cache and all lines are occupied, a replacement policy determines which existing cache line to evict. Common replacement policies include Least Recently Used (LRU), First-In-First-Out (FIFO), or random selection. The chosen policy aims to optimize performance by keeping the most relevant data in the cache. Fully associative caches are typically used for smaller caches or specific purposes due to their hardware complexity and power consumption. Larger caches often employ set-associative mapping techniques.
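The LRU replacement policy can be sketched as follows. This is a software stand-in under stated assumptions: the class name `FullyAssociativeLRU` is hypothetical, and the dictionary lookup merely imitates the parallel CAM search that hardware would perform.

```python
from collections import OrderedDict


class FullyAssociativeLRU:
    """Toy fully associative cache with LRU replacement (tags only)."""

    def __init__(self, num_lines=4, line_size=64):
        self.num_lines = num_lines
        self.line_size = line_size
        self.lines = OrderedDict()   # block number -> None, least recent first
        self.hits = 0
        self.misses = 0

    def access(self, addr):
        """Look up a byte address; return True on a hit, False on a miss."""
        block = addr // self.line_size
        if block in self.lines:                  # any line may hold any block
            self.lines.move_to_end(block)        # mark as most recently used
            self.hits += 1
            return True
        if len(self.lines) >= self.num_lines:
            self.lines.popitem(last=False)       # evict the least recently used
        self.lines[block] = None
        self.misses += 1
        return False


if __name__ == "__main__":
    cache = FullyAssociativeLRU(num_lines=2)
    print([cache.access(a) for a in (0, 64, 0, 128, 0, 64)])
```

With two lines, the access to address 128 evicts the block at address 64 rather than the block at address 0, because address 0 was touched more recently.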

N-way Set Associative Cache

An n-way set associative cache offers a middle ground between the simplicity of a direct-mapped cache and the flexibility of a fully associative cache. In this organization, the cache is divided into a number of sets, each containing n cache lines (where n is the associativity). Each memory block can be placed in any of the n lines within a specific set, determined by a mapping function (typically using the modulo operation on the memory address). This structure allows for flexibility in placement while maintaining a relatively simple lookup process.

When the CPU requests data, it first calculates which set the data belongs to using memory address bits. Once the set is identified, the cache controller simultaneously checks all n lines within that set for a match. This is done by comparing the tag stored in each cache line with the corresponding bits of the requested memory address. If a match is found in any of the lines, it's a cache hit, and the data is quickly retrieved. If no match is found, it's a cache miss, and the data is fetched from main memory. A replacement policy (such as Least Recently Used or random) determines which of the n lines to evict.

The n-way set associative cache offers a balance between performance and complexity. As n increases, the cache behaves more like a fully associative cache, potentially reducing conflict misses and improving hit rates. However, larger n values also increase the complexity of the cache controller and the time needed for tag comparison. Common values for n include 2, 4, and 8, with the choice depending on the specific requirements of the system. This cache organization is widely used in modern processors, often in multiple levels of the cache hierarchy.
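A rough sketch of the set lookup, assuming LRU replacement within each set. The class name `SetAssociativeCache` and the default geometry (4 sets, 2 ways, 64-byte lines) are illustrative assumptions only.

```python
from collections import OrderedDict


class SetAssociativeCache:
    """Toy n-way set associative cache with per-set LRU (tags only)."""

    def __init__(self, num_sets=4, ways=2, line_size=64):
        self.num_sets = num_sets
        self.ways = ways
        self.line_size = line_size
        # One ordered map per set: tag -> None, least recently used first.
        self.sets = [OrderedDict() for _ in range(num_sets)]
        self.hits = 0
        self.misses = 0

    def access(self, addr):
        """Look up a byte address; return True on a hit, False on a miss."""
        block = addr // self.line_size
        index = block % self.num_sets    # which set to search
        tag = block // self.num_sets
        lines = self.sets[index]
        if tag in lines:                 # compare against every way in the set
            lines.move_to_end(tag)
            self.hits += 1
            return True
        if len(lines) >= self.ways:
            lines.popitem(last=False)    # evict the set's least recently used line
        lines[tag] = None
        self.misses += 1
        return False


if __name__ == "__main__":
    cache = SetAssociativeCache(num_sets=4, ways=2)
    # Addresses 0 and 512 fall in the same set but can occupy different ways.
    for _ in range(4):
        cache.access(0)
        cache.access(512)
    print(cache.hits, cache.misses)
```

Setting `ways=1` reduces this model to a direct-mapped cache, and setting `num_sets=1` makes it fully associative, which mirrors the spectrum described above.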

Don’t Miss the Bus

Cache misses, while inevitable, can significantly impact system performance if not managed effectively. When a miss occurs, the system fetches the required data from main memory, a process that is considerably slower than cache access. To mitigate this performance hit, modern systems employ various strategies. A common approach is the use of multi-level cache hierarchies, where larger, slower caches complement smaller, faster ones. Another strategy is prefetching, where the system anticipates future data needs and loads them into the cache proactively. Cache replacement policies help optimize which data to keep in cache when new data must be loaded. The effectiveness of these strategies can significantly reduce the impact of cache misses on overall system performance.

Write Handling

Reads take the majority of cycles in compute systems; however, writes must not be overlooked. Write operations in cache memory require careful management to maintain data coherency between the cache and main memory [5].

Two primary methods are employed:

Write-through - Data is written to both the cache and main memory simultaneously, ensuring immediate consistency but potentially increasing memory traffic and slowing write operations.

Write-back - Updates are initially made only to the cache, and the modified cache line is marked as 'dirty'. The main memory update is deferred until the cache line needs to be replaced. While this approach reduces memory traffic and improves write performance, it introduces complexity in maintaining data coherency.
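The difference in memory traffic between the two policies can be illustrated with a small model. This is a sketch under stated assumptions: the class name `WriteCache` is hypothetical, the cache is direct-mapped, and a write miss allocates the line in the cache (write-allocate), which is one common choice but not the only one.

```python
class WriteCache:
    """Toy direct-mapped cache that counts writes reaching main memory."""

    def __init__(self, policy, num_lines=4, line_size=64):
        if policy not in ("write-through", "write-back"):
            raise ValueError(f"unknown policy: {policy}")
        self.policy = policy
        self.num_lines = num_lines
        self.line_size = line_size
        self.tags = [None] * num_lines
        self.dirty = [False] * num_lines
        self.memory_writes = 0

    def write(self, addr):
        """Write to a byte address, updating the memory-write counter."""
        block = addr // self.line_size
        index = block % self.num_lines
        tag = block // self.num_lines
        if self.tags[index] != tag:
            # Miss: the old line is replaced; a dirty line is written back first.
            if self.dirty[index]:
                self.memory_writes += 1
                self.dirty[index] = False
            self.tags[index] = tag       # write-allocate (assumed)
        if self.policy == "write-through":
            self.memory_writes += 1      # every write also goes to memory
        else:
            self.dirty[index] = True     # defer the memory update

    def flush(self):
        """Write every dirty line back to memory."""
        for i in range(self.num_lines):
            if self.dirty[i]:
                self.memory_writes += 1
                self.dirty[i] = False


if __name__ == "__main__":
    for policy in ("write-through", "write-back"):
        cache = WriteCache(policy)
        for _ in range(100):
            cache.write(0)               # hammer one location
        cache.flush()
        print(policy, cache.memory_writes)
```

The counter mixes word-sized writes (write-through) with line-sized write-backs, so it shows the number of memory transactions rather than bytes moved.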

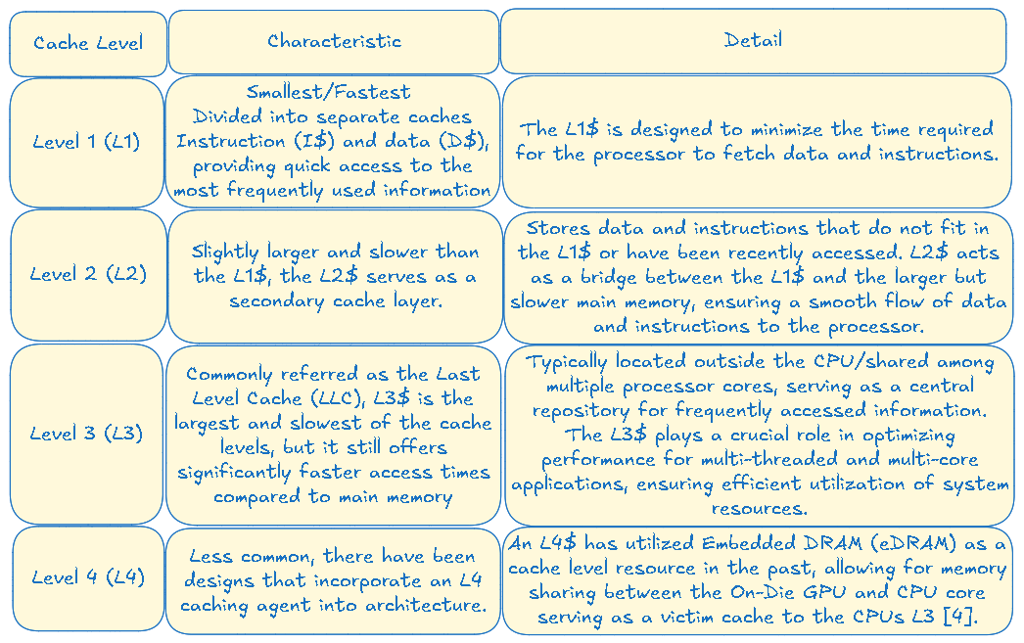

Levels of Cache Memory

Cache memory is organized into multiple levels, each with its own unique characteristics and performance capabilities. Understanding these levels is crucial for optimizing your system's performance and making informed decisions when architecting new systems.

Impact of Cache on Gaming and Multimedia

In the world of gaming and multimedia, cache memory is crucial for ensuring smooth and fast performance. It plays a vital role in providing users with the best possible experience. Gaming and multimedia applications take advantage of cache memory to achieve outstanding performance, allowing users to fully engage in their digital experiences.

Gaming: Modern games are highly demanding, featuring complex graphics, intricate physics simulations, and rich virtual environments. Cache memory ensures that game data, textures, and instructions are readily available to the processor, minimizing loading times and reducing game lag.

Multimedia Applications: Whether you're editing high-resolution videos, working with large image files, or creating intricate 3D models, multimedia applications heavily rely on efficient data management and processing. Cache memory serves as a high-speed buffer, allowing these applications to quickly access and manipulate large amounts of data.

Video Playback: Seamless video playback is essential for an immersive multimedia experience. Cache memory plays a crucial role in ensuring that video data is readily available to the processor, enabling smooth playback without buffering or stuttering.

Developer Practices for Optimization

There are several software and compiler best practices that can be used for cache memory optimization. Such strategies help to reduce response time and improve scalability, among other improvements [6].

Utilization of Cache-Friendly Algorithms: Software developers and programmers can optimize their code to take advantage of cache memory by implementing cache-friendly algorithms. These algorithms are designed to minimize cache misses (instances where data is not found in the cache) and maximize cache hit rates, resulting in faster data retrieval and processing.

Data Structure Alignment: Proper data alignment can significantly improve cache memory utilization. By aligning data structures to cache line boundaries, you can reduce the number of cache misses and improve overall performance.

Prefetching Techniques: Modern processors often support hardware and software prefetching techniques, which proactively load data into the cache before it is needed. By utilizing these techniques, you can minimize cache misses and improve overall system performance, particularly for applications with predictable data access patterns.

Cache Performance Monitoring: Regularly monitoring and analyzing cache performance can help identify potential bottlenecks or inefficiencies. Various tools and utilities are available to measure cache hit rates, miss rates, and other performance metrics, allowing you to make informed decisions about hardware upgrades or software optimizations.

Cache-Aware Scheduling: In multi-core and multi-threaded environments, cache-aware scheduling can play a crucial role in optimizing cache utilization. By intelligently scheduling tasks and processes across available cores, you can minimize cache conflicts and maximize cache hit rates, resulting in improved overall system performance.
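As a small illustration of the cache-friendly algorithms item above, the sketch below counts misses for two traversal orders of the same row-major array. Everything here is an assumption for the example: the helper names, the 8-byte element size, and the tiny direct-mapped cache model (8 lines of 64 bytes). It models addresses only and does not measure real hardware.

```python
ELEM_SIZE = 8   # bytes per element (assumed, e.g. a 64-bit float)


def count_misses(addresses, line_size=64, num_lines=8):
    """Count misses for an address trace in a tiny direct-mapped cache model."""
    tags = [None] * num_lines
    misses = 0
    for addr in addresses:
        block = addr // line_size
        index = block % num_lines
        tag = block // num_lines
        if tags[index] != tag:
            tags[index] = tag
            misses += 1
    return misses


def row_order(rows, cols):
    """Addresses touched when a row-major array is walked row by row."""
    return [(r * cols + c) * ELEM_SIZE for r in range(rows) for c in range(cols)]


def column_order(rows, cols):
    """Addresses touched when the same array is walked column by column."""
    return [(r * cols + c) * ELEM_SIZE for c in range(cols) for r in range(rows)]


if __name__ == "__main__":
    print("row order misses:   ", count_misses(row_order(64, 64)))
    print("column order misses:", count_misses(column_order(64, 64)))
```

In this model the row-by-row walk uses all eight elements of each fetched line before moving on, while the column-by-column walk jumps a full row ahead on every access and never reuses a line before it is replaced.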

When using cache performance monitoring tools, look for key metrics such as the hit rate (the percentage of memory accesses satisfied by the cache) and the average memory access time (which factors in both hit and miss latencies).
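Both metrics are simple to compute once hit and miss counts are available. The sketch below uses the standard textbook relation, average memory access time = hit time + miss rate × miss penalty; the function names and all latency figures are hypothetical values picked for the example.

```python
def hit_rate(hits, misses):
    """Fraction of accesses satisfied by the cache."""
    total = hits + misses
    if total == 0:
        raise ValueError("no accesses recorded")
    return hits / total


def amat(hit_time, miss_rate, miss_penalty):
    """Average memory access time: hit time + miss rate * miss penalty."""
    return hit_time + miss_rate * miss_penalty


if __name__ == "__main__":
    # Hypothetical single-level cache: 1 ns hit, 5% misses, 100 ns to memory.
    print(amat(hit_time=1.0, miss_rate=0.05, miss_penalty=100.0))
    # Hypothetical two-level hierarchy: the L1 miss penalty is the L2 AMAT.
    l2 = amat(hit_time=10.0, miss_rate=0.2, miss_penalty=100.0)
    print(amat(hit_time=1.0, miss_rate=0.05, miss_penalty=l2))
```

Nesting the formula this way shows why a second cache level helps: it lowers the penalty paid on each first-level miss.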

Common Hardware Cache Gotchas

Cache memory is not immune to potential issues and challenges. Understanding common cache memory problems and their respective troubleshooting techniques can help you maintain optimal system performance and address issues that may arise.

Cache Thrashing: Occurs when the cache is constantly overwritten with new data, leading to frequent misses and performance degradation. This issue can arise when working with large datasets that exceed the cache's capacity or when running multiple memory-intensive applications simultaneously. To mitigate thrashing, you can consider upgrading to a larger cache size or optimizing your application's memory usage.

Cache Coherency: In multi-core and multi-processor systems, cache coherency ensures that all cores have a consistent view of shared data. Cache coherency issues can lead to data inconsistencies, resulting in incorrect computations or system crashes.

Cache Invalidation: Invalidation problems occur when the cache holds stale or outdated data that should have been marked invalid, leading to incorrect results or system instability. This issue can arise due to software bugs, hardware failures, or improper cache management. Regular cache flushing or implementing cache invalidation techniques in your software can help mitigate this problem.

Cache Pollution: Cache pollution occurs when the cache is filled with irrelevant or unused data, reducing the available space for frequently accessed data. This can lead to increased cache misses and performance degradation. Optimizing your application's memory usage, implementing cache partitioning, or upgrading to a larger cache size can help alleviate cache pollution.

I respect the professionals who understand the inner world of low-level caching fundamentals. Coherency flows alone (not touched on here) in a true multi-rack/socket/core system, combined with all the various patterns that modern software throws at them, are extreme. This write-up is by no means the be-all and end-all documentation on cache memory. As engineering professionals, some (like me) still have cache books on the shelf, kept as keepsakes of the 10K hours that it takes to gather professional knowledge. I cannot, however, conduct a write-up about how Memory Matters without talking about cache.

Cache memory ensures your system operates at its peak potential, whether you're craving seamless video playback or relying on resource-intensive applications. Understanding the different levels of cache memory (L1, L2, L3) and their unique characteristics is crucial for optimizing system performance and making informed decisions when architecting new solutions. While it's uncommon to be able to upgrade your cache memory, you should be aware of the CPU cache size and levels when purchasing a high-powered computer. By leveraging the power of cache memory and implementing best practices such as cache-friendly algorithms, data alignment, and prefetching techniques, you can unlock the full potential of your system's capabilities.

References

[1] Patterson, D. and Hennessy, J. (2014) Computer Organization and Design: The Hardware/Software Interface. 5th Edition, Morgan Kaufmann, Burlington.

[2] Handy, J. (1998) The Cache Memory Book. 2nd Edition, Morgan Kaufmann, Burlington.

[3] https://www.geeksforgeeks.org/cache-memory-in-computer-organization/

[4] https://superuser.com/questions/1073937/what-does-l4-cache-hold-on-some-cpus

[5] 2015 course by UC Berkeley, "Computer Science 61C", available on Archive.

[6] https://fastercapital.com/content/Optimal-Caching-Strategies--Unleashing-the-Replacement-Chain-Method.html

Linked to ObjectiveMind.ai