NeoClouds: The Next Revolution in Cloud Computing

Memory Matters #53

AI infrastructure spending tells a clear story. IDC reports that servers with embedded accelerators (GPUs, TPUs, and custom AI chips) accounted for 70% of AI infrastructure spending in the first half of 2024, marking 178% year-over-year growth [14]. This data points to the emergence of NeoClouds: specialized cloud computing platforms engineered specifically for AI workloads.

Analysis from Uptime Institute shows these specialized providers are disrupting established patterns through direct cost advantages. Hyperscalers charge an average of $98 per hour for an Nvidia DGX H100 instance, while equivalent NeoCloud offerings cost just $34, a substantial saving of about 66% [1]. The numbers become more compelling when examining revenue growth: NeoCloud providers generated over $5 billion in Q2 alone, growing by 205% year-over-year [13].
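The saving follows from simple arithmetic on the two hourly prices. The snippet below is a minimal sketch; the function name `percent_saving` is illustrative and does not come from any cited source. Note that the two quoted prices work out to roughly 65.3%, slightly below the rounded 66% figure.

```python
def percent_saving(baseline: float, alternative: float) -> float:
    """Return the saving of `alternative` relative to `baseline`, in percent."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - alternative) / baseline * 100.0


# Hourly prices quoted in the text for a DGX H100 class instance.
HYPERSCALER_RATE = 98.0
NEOCLOUD_RATE = 34.0

saving = percent_saving(HYPERSCALER_RATE, NEOCLOUD_RATE)
print(f"Saving versus hyperscaler: {saving:.1f}%")
```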

For engineers and cloud architects working in today's competitive landscape, uptime serves as the primary differentiator. The NeoCloud movement is reshaping cloud computing through focused efficiency, operational reliability, and market responsiveness. Synergy Research Group forecasts that NeoCloud revenues will reach almost $180 billion by 2030, growing at an average of 69% annually [13]. This growth significantly outpaces the broader cloud market's projected CAGR of 22.9% through 2030 [14].
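To get a feel for how far apart the two growth rates are, a compound-growth multiplier can be computed for each. This sketch assumes a hypothetical five-year horizon and constant annual rates, which real markets will not follow exactly.

```python
def growth_multiplier(annual_rate: float, years: int) -> float:
    """Return how many times a value grows at a constant annual rate."""
    return (1.0 + annual_rate) ** years


HORIZON_YEARS = 5  # hypothetical horizon, chosen only for illustration

neocloud_multiple = growth_multiplier(0.69, HORIZON_YEARS)    # 69% per year
cloud_multiple = growth_multiplier(0.229, HORIZON_YEARS)      # 22.9% CAGR

print(f"NeoCloud segment: {neocloud_multiple:.1f}x over {HORIZON_YEARS} years")
print(f"Broader cloud market: {cloud_multiple:.1f}x over {HORIZON_YEARS} years")
```

Under these assumptions the NeoCloud segment grows nearly 14 times while the broader market grows a little under 3 times.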

Let's examine what makes NeoClouds fundamentally different from traditional providers, explore how uptime has become the core competitive metric, and investigate the emerging token economics reshaping cost structures. We will also profile key players, including CoreWeave, Nebeus, and FluidStack, who demonstrate diverse approaches to this evolving ecosystem.

What Makes NeoClouds Different from Traditional Cloud Providers

NeoClouds represent a fundamental shift in cloud infrastructure design and delivery. Traditional hyperscalers handle everything from websites to databases, while these specialized providers focus solely on maximizing performance for artificial intelligence workloads. Three key differentiators define how these platforms are reshaping cloud computing.

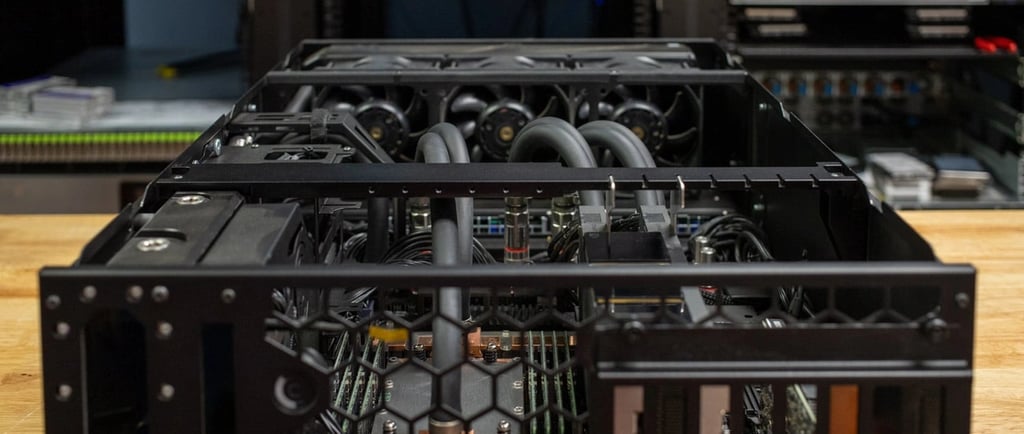

GPU-first architecture vs general-purpose compute

AWS, Google Cloud, and Microsoft Azure operate as generalists, offering hundreds of services across diverse industries. NeoClouds prioritize artificial intelligence workloads with infrastructure specifically engineered for high-performance computing [4]. This specialized approach delivers both technical and economic advantages.

The entire architecture centers around GPU acceleration rather than CPU-based computing [2]. Organizations gain immediate access to cutting-edge GPU technology like NVIDIA H100s, A100s, and L40s without the months-long procurement waits common with hyperscalers [2]. This GPU-first design enables faster model training and more efficient AI application performance.

NeoClouds' specialized infrastructure delivers up to 66% savings compared to hyperscalers because they optimize operations specifically for AI workloads [4]. This focus maximizes uptime—the key differentiator in today's competitive cloud market.

Bare-metal access and low-latency networking

Direct hardware access sets NeoClouds apart. Bare-metal or thin-VM configurations remove the virtualization overhead found in traditional cloud environments [4]. This approach ensures predictable throughput, which is particularly crucial for model-parallel workloads and high-performance inference [4].

Networking infrastructure differs dramatically from traditional providers. Within a node, NVIDIA NVLink-4 delivers up to 900 GB/s of bandwidth [4]. Between nodes, some NeoClouds deploy 3.2 Tbps InfiniBand fabrics, four times the bandwidth of the 800 Gb/s Ethernet links used by traditional providers [4]. This architecture supports model parallelism by minimizing bottlenecks during large AI workload processing.
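The bandwidth figures mix bytes and bits, so a quick unit conversion helps when comparing them. The sketch below computes a best-case transfer time for a hypothetical 140 GB payload on each link; the payload size and helper names are assumptions for illustration, and real transfers will be slower because of latency and protocol overhead.

```python
def gigabits_to_gigabytes(gigabits_per_s: float) -> float:
    """Convert a link rate from Gb/s to GB/s (8 bits per byte)."""
    return gigabits_per_s / 8.0


def ideal_transfer_seconds(payload_gb: float, rate_gb_per_s: float) -> float:
    """Best-case transfer time, ignoring latency and protocol overhead."""
    return payload_gb / rate_gb_per_s


PAYLOAD_GB = 140.0  # hypothetical payload size, for illustration only

# Link rates from the text, normalized to GB/s.
links_gb_per_s = {
    "NVLink intra-node (900 GB/s)": 900.0,
    "InfiniBand fabric (3.2 Tb/s)": gigabits_to_gigabytes(3200.0),
    "Ethernet link (800 Gb/s)": gigabits_to_gigabytes(800.0),
}

for name, rate in links_gb_per_s.items():
    seconds = ideal_transfer_seconds(PAYLOAD_GB, rate)
    print(f"{name}: {rate:.0f} GB/s, {seconds:.2f} s")
```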

Transparent pricing and simplified billing

Cloud economics undergo radical simplification through NeoClouds. Traditional cloud providers often bury pricing models in complex documentation, creating financial anxiety for teams without dedicated cost managers [5].

NeoClouds simplify billing by publishing a single per-GPU hourly rate that includes networking, storage, and support [4]. Some providers offer H100 GPUs starting at $1.99-$2.49 per hour with no hidden fees [1][4]. This transparency helps organizations calculate and control costs while avoiding over-provisioning [6].

Independent analysis confirms that eliminating egress and control-plane surcharges results in 30–50% lower total cost of ownership compared to virtualized public clouds [4]. This pricing clarity enables teams to experiment freely and scale confidently, both essential advantages in the AI infrastructure landscape.

The Role of Uptime in the NeoCloud Era

Uptime defines success in the NeoCloud ecosystem. Even a short outage can cost millions in lost revenue. As NeoClouds reshape computing infrastructure, their success depends heavily on maintaining continuous service availability.

Why uptime is the new competitive edge

Uptime extends beyond technical metrics; it represents fundamental business value. Companies face competitors just one click away, making connectivity outages unacceptable [7]. The NeoCloud space amplifies this reality. For example, a two-hour outage at a retail giant like Target resulted in an estimated $50 million in lost revenue [8]. Uptime has become the heartbeat of customer confidence, forming the backbone of service reliability in our digitally dependent economy [9].
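Availability percentages and outage costs are easier to reason about once converted to minutes. The following sketch assumes a 365-day year and uses illustrative helper names; it translates an availability target into allowed downtime and derives a per-minute cost from the outage estimate cited above.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600, assuming a non-leap year


def downtime_minutes_per_year(availability_pct: float) -> float:
    """Minutes of downtime per year implied by an availability percentage."""
    if not 0.0 <= availability_pct <= 100.0:
        raise ValueError("availability_pct must be between 0 and 100")
    return MINUTES_PER_YEAR * (1.0 - availability_pct / 100.0)


def cost_per_minute(total_loss: float, outage_minutes: float) -> float:
    """Average loss per minute of an outage."""
    return total_loss / outage_minutes


# Estimated $50 million lost over a two-hour outage, as cited in the text.
target_per_minute = cost_per_minute(50_000_000, 120)
print(f"Average loss per minute: ${target_per_minute:,.0f}")

for pct in (99.0, 99.9, 99.99):
    print(f"{pct}% availability allows {downtime_minutes_per_year(pct):.1f} min/year")
```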

How uptime impacts token economics and TCO

Total Cost of Ownership (TCO) in NeoClouds connects directly to operational reliability. The relationship follows clear patterns:

• Validator uptime directly impacts staking rewards, with top performers maintaining at least 99% uptime [10]

• Slashing penalties range from 0.01% to 5% of delegated tokens when validators fail to meet standards [10]

• Network statistics show validators who skip block production turns earn below-average rewards despite meeting uptime metrics [11]

Understanding and managing TCO through reliable uptime helps businesses avoid fragmented decision-making that leads to higher costs and service disruptions [12].
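The slashing range quoted above can be turned into concrete token amounts. This is a minimal sketch, assuming a hypothetical delegation of 10,000 tokens; the function name is illustrative and does not correspond to any specific network's API.

```python
def slashing_penalty(delegated_tokens: float, penalty_pct: float) -> float:
    """Tokens lost when a penalty of `penalty_pct` percent is applied."""
    if penalty_pct < 0:
        raise ValueError("penalty_pct must be non-negative")
    return delegated_tokens * penalty_pct / 100.0


DELEGATED = 10_000.0  # hypothetical stake, for illustration only

low = slashing_penalty(DELEGATED, 0.01)   # lower bound cited in the text
high = slashing_penalty(DELEGATED, 5.0)   # upper bound cited in the text

print(f"Penalty range on {DELEGATED:,.0f} tokens: {low:.2f} to {high:.2f} tokens")
```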

Uptime as a trust signal in high-demand markets

Uptime serves as a powerful trust indicator throughout the customer journey. When users first visit a site or service, accessibility acts as an initial credibility test [13]. Continuous availability sends clear messages about operational reliability. Search engines prioritize websites with consistent uptime, directly affecting visibility and access to high-intent traffic [13]. Competitive industries like SaaS, finance, and NeoClouds treat uptime as the benchmark that separates trusted providers from the rest [14].

Tokenized Usage Models and Cost Efficiency

NeoCloud financial structures operate on fundamentally different economic principles than traditional cloud providers. Tokenized usage models create resource allocation efficiencies that address specific pain points in AI infrastructure economics.

Understanding token-per-GPU pricing

Token-per-GPU models eliminate the complex billing architectures that characterize traditional cloud platforms. NeoClouds offer a single, transparent per-GPU hourly rate that includes networking, storage, and support [4]. This approach removes billing uncertainty from resource planning. Current market rates show H100 GPUs starting at just $1.99-$2.49 per GPU-hour with no hidden fees [4]. For comparison, the hyperscaler average of $98 per hour applies to a full DGX H100 instance, which contains eight GPUs [1].

Independent analysis confirms 30-50% lower total cost of ownership compared to virtualized public clouds [4]. The token model does present challenges, however: apparent "x-dollar per GPU" pricing can obscure additional costs, including high-speed networking, data transfer fees, and operational overhead [15].
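A flat per-GPU rate makes job costs straightforward to estimate. The sketch below assumes a hypothetical job of 8 GPUs for 24 hours and applies the quoted $1.99-$2.49 range; the `extras` parameter is a placeholder for the networking, data transfer, and operational costs that the headline rate may not cover.

```python
def job_cost(gpus: int, hours: float, rate_per_gpu_hour: float,
             extras: float = 0.0) -> float:
    """Estimated cost of a job billed at a flat per-GPU hourly rate."""
    if gpus < 0 or hours < 0:
        raise ValueError("gpus and hours must be non-negative")
    return gpus * hours * rate_per_gpu_hour + extras


GPUS = 8       # hypothetical job size
HOURS = 24.0   # hypothetical duration

low_estimate = job_cost(GPUS, HOURS, 1.99)
high_estimate = job_cost(GPUS, HOURS, 2.49)

print(f"Estimated cost: ${low_estimate:.2f} to ${high_estimate:.2f}")
```

Passing a non-zero `extras` value is one way to keep costs outside the headline rate visible in the same estimate.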

Reducing idle cost through efficient uptime

Idle resource management represents a critical economic factor in AI infrastructure. H100 GPU pricing decreased from approximately $5-6 per hour to around $1 per hour within twelve months [16]. This rapid depreciation creates financial pressure when revenue drops 80% while leasing costs remain fixed [16].
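The pressure described above can be illustrated with a small margin calculation. This sketch assumes a hypothetical fixed lease cost of $3 per GPU-hour, which is not taken from any cited source; only the rental prices come from the text.

```python
def price_drop_pct(old_price: float, new_price: float) -> float:
    """Percentage decline from `old_price` to `new_price`."""
    return (old_price - new_price) / old_price * 100.0


def hourly_margin(rental_price: float, fixed_cost: float) -> float:
    """Margin per GPU-hour when the lease cost does not change."""
    return rental_price - fixed_cost


FIXED_LEASE_COST = 3.0  # hypothetical fixed cost per GPU-hour

for old_price in (5.0, 6.0):
    drop = price_drop_pct(old_price, 1.0)
    before = hourly_margin(old_price, FIXED_LEASE_COST)
    after = hourly_margin(1.0, FIXED_LEASE_COST)
    print(f"${old_price:.0f} -> $1: {drop:.1f}% drop, "
          f"margin {before:+.2f} -> {after:+.2f} per GPU-hour")
```

A price decline from $5 to $1 corresponds to the 80% revenue drop mentioned above; with a fixed cost, the same decline turns a positive margin into a loss.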

Uptime optimization becomes essential for cost efficiency. Dedicated infrastructure reaches cost parity with public cloud at approximately 33% utilization [1]. NeoClouds require 66% utilization to achieve comparable economics [1]—demonstrating why operational excellence in resource utilization directly affects financial sustainability.
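A break-even utilization can be derived by comparing an always-on hourly cost against an on-demand rate that is paid only for hours actually used. The sketch below assumes that simple model; the dedicated cost of $17 per hour is a hypothetical placeholder, so the output is not meant to reproduce the cited 33% and 66% figures.

```python
def effective_rate(hourly_cost: float, utilization: float) -> float:
    """Cost per productive hour when capacity is paid for around the clock."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    return hourly_cost / utilization


def break_even_utilization(always_on_cost: float, on_demand_rate: float) -> float:
    """Utilization above which always-on capacity beats on-demand pricing."""
    return always_on_cost / on_demand_rate


DEDICATED_COST = 17.0  # hypothetical always-on cost per hour

vs_hyperscaler = break_even_utilization(DEDICATED_COST, 98.0)
vs_neocloud = break_even_utilization(DEDICATED_COST, 34.0)

print(f"Break-even vs $98/hour: {vs_hyperscaler:.1%}")
print(f"Break-even vs $34/hour: {vs_neocloud:.1%}")
print(f"Effective rate at 25% utilization: ${effective_rate(DEDICATED_COST, 0.25):.2f}")
```

The cheaper the on-demand alternative, the higher the utilization that dedicated hardware needs before it pays off, which is why idle capacity matters so much.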

Feedback loops between performance and cost

Performance optimization creates measurable economic returns in properly managed NeoClouds. Organizations implementing optimized infrastructure configurations achieve 6.6% margin improvements [17]. Concurrent NeoCloud adoption generates 25-50% savings compared to hyperscaler alternatives [17].

This creates self-reinforcing cycles: improved performance increases utilization rates, which enhance cost metrics and enable additional optimization investments. These feedback mechanisms distinguish sustainable operators from short-term market participants. One industry expert observed, "The GPU itself is only a small part of the total cost—the rest lies in keeping the system efficient, secure, and operational at scale" [15].

Key Players and Operational Models in the NeoCloud Ecosystem

The NeoCloud ecosystem features several standout players whose operational approaches demonstrate how specialized GPU infrastructure shapes cloud computing's future.

CoreWeave: Scaling GPU workloads with elasticity

CoreWeave leads the market in specialized cloud infrastructure for AI applications. This company evolved from crypto mining operations and now operates 33 data centers across the US and Europe [18]. Their partnership with Nvidia provides first-to-market access to cutting-edge GPUs, including the GB200 Superchip [18].

The technical architecture delivers practical benefits. Their Kubernetes-native environment enables workloads to be operational within 5 seconds, with no charges for data ingress or egress [19]. This rapid deployment capability allows clients to scale across thousands of GPUs instantly while achieving 50-80% savings compared to legacy clouds [19].

Nebeus: Decentralized and tokenized compute

Nebeus operates as a cryptocurrency platform providing financial services including buying, selling, remitting, and financing funds in cryptocurrencies [20]. Their ecosystem enables secure access to blockchain-based financial services through smart contracts [20].

The tokenization model demonstrates economic innovation. NBTK token holders receive a 20% share of commissions generated by the platform [20]. These tokens function across multiple use cases: paying platform fees, providing loan collateral, and acting as leverage tools for cryptocurrency trading [20].

FluidStack: Distributed edge GPU capacity

FluidStack has built its reputation on rapid deployment capabilities, enabling clients to deploy 2,500+ GPUs within 48 hours [21]. Their infrastructure specifications include:

• Single-tenant environments by default, with fully isolated hardware, network, and storage levels [21]

• Low-latency InfiniBand fabrics benchmarked to 95%+ theoretical performance [3]

• Fast provisioning of next-generation GPUs including H100, H200, B200, and GB200 [3]

Working with NVIDIA, Borealis, and Dell, they develop exascale GPU clusters to support demanding AI workloads [22].

What separates scalable operators from short-term entrants

Funding determines which NeoClouds succeed long-term. Building GPU infrastructure requires substantial capital, with investors requiring clear business models and established client support [23]. Short-term contracts (typically 2-5 years) create mismatches with asset payback periods of 7-9 years [23].
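The mismatch between contract length and payback period can be expressed as a coverage ratio. This is a minimal sketch using the ranges quoted above; the function name and the best-case and worst-case pairings are illustrative assumptions.

```python
def coverage_ratio(contract_years: float, payback_years: float) -> float:
    """Fraction of the asset payback period covered by a customer contract."""
    if payback_years <= 0:
        raise ValueError("payback_years must be positive")
    return contract_years / payback_years


# Ranges from the text: contracts of 2-5 years, payback of 7-9 years.
best_case = coverage_ratio(5, 7)    # longest contract, shortest payback
worst_case = coverage_ratio(2, 9)   # shortest contract, longest payback

print(f"Contract covers {worst_case:.0%} to {best_case:.0%} of the payback period")
```

Even in the best case, a single contract leaves more than a quarter of the payback period dependent on renewals or new customers.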

Strategic advantages matter most. Only operators with strategic power access and real estate holdings survive long-term consolidation [24]. JLL research identifies approximately 190 distinct NeoCloud operators currently active [25]. However, operational excellence in balancing supply elasticity, cost control, and uptime guarantees determines which operators succeed in the AI infrastructure landscape.

Closure Report

NeoClouds represent a clear evolution in cloud computing infrastructure. This article has examined how specialized providers are reshaping the market through GPU-first architecture, direct hardware access, and simplified pricing structures. Uptime now determines success in this competitive landscape.

The financial case is established. Organizations can reduce infrastructure costs by 66% while accessing superior performance capabilities. The projected $180 billion market by 2030 indicates lasting change rather than temporary disruption. Engineers and architects now face a direct choice: general-purpose infrastructure or specialized platforms designed for AI workloads.

Token-per-GPU pricing creates cost predictability that enables experimentation and controlled scaling. Teams can calculate expenses accurately and make informed infrastructure decisions without complex billing surprises.

Market consolidation will separate sustainable providers from temporary entrants. Success requires consistent operational performance, efficient resource management, and strategic infrastructure planning. CoreWeave, Nebeus, and FluidStack each demonstrate viable approaches when supported by solid engineering practices.

Performance and cost efficiency create reinforcing cycles. Higher uptime drives better utilization, which improves economic metrics and builds customer confidence. The competitive advantage belongs to providers who balance reliability, efficiency, and operational excellence.

Cloud computing's future will be shaped by specialized providers who recognize that uptime represents business value, not just technical performance. Organizations adopting this specialized approach gain practical advantages: faster development cycles, more predictable costs, and infrastructure that adapts to AI requirements.

The technology works today and works for you - NeoClouds offer engineering teams the tools to build efficiently while controlling costs and maintaining performance standards essential for AI development success.

Key Takeaways

NeoClouds are revolutionizing cloud computing by offering specialized GPU-first infrastructure that delivers up to 66% cost savings compared to traditional hyperscalers while maintaining superior uptime performance.

• NeoClouds offer dramatic cost advantages: Specialized providers charge $34/hour for H100 instances versus $98/hour from hyperscalers, delivering 30-50% lower total cost of ownership through transparent, token-based pricing models.

• Uptime is the new competitive battleground: In AI infrastructure, even brief downtime can cost millions in revenue, making consistent availability the ultimate differentiator that separates successful providers from short-term entrants.

• GPU-first architecture enables superior performance: Unlike general-purpose clouds, NeoClouds provide bare-metal access, NVLink intra-node bandwidth of up to 900 GB/s, and immediate access to cutting-edge GPUs without procurement delays.

• Market consolidation is inevitable: With 190+ NeoCloud operators competing and revenues projected to reach $180 billion by 2030, only providers with operational excellence and strategic infrastructure investments will survive long-term.

• Token economics create efficiency feedback loops: Better uptime drives higher utilization, which improves cost metrics and enables further optimization investments, creating a virtuous cycle that benefits both providers and customers.

The NeoCloud revolution represents a fundamental shift toward specialized infrastructure that prioritizes AI workload performance, cost transparency, and operational reliability over general-purpose computing capabilities.

References

[1] - https://www.voltagepark.com/blog/neoclouds-the-next-generation-of-ai-infrastructure

[2] - https://journal.uptimeinstitute.com/neoclouds-a-cost-effective-ai-infrastructure-alternative/

[3] - https://www.srgresearch.com/articles/neoclouds-currently-growing-by-over-200-per-year-will-reach-180-billion-in-revenues-by-2030

[4] - https://blog.equinix.com/blog/2025/10/14/what-is-a-neocloud/

[5] - https://pub.towardsai.net/the-new-cloud-war-how-ai-first-neoclouds-are-changing-everything-83a171f3f13d

[6] - https://www.nextdc.com/blog/neoclouds-vs-hyperscalers-the-rise-of-ai-first-infrastructure-and-what-it-means-for-you

[7] - https://www.zayo.com/resources/what-neoclouds-are-and-why-they-matter-to-enterprises/

[8] - https://rafftechnologies.com/blog/oefcpitm0sptevookidpa0ui

[9] - https://phoenixnap.com/blog/neocloud

[10] - https://telecomreseller.com/2020/01/20/uptime-the-competitive-edge-in-serving-customers/

[11] - https://f.hubspotusercontent40.net/hubfs/8928696/Distributed Cloud and Edge Computing for 100%25 Uptime.pdf

[12] - https://www.linkedin.com/pulse/importance-uptime-fundamental-value-douglas-day-mba-n9lhc

[13] - https://blockapps.net/blog/staking-in-crypto-how-to-effectively-select-atom-validators-for-maximum-rewards/

[14] - https://figment.io/insights/safety-over-liveness-breaking-down-the-uptime-metric-for-validator-performance/

[15] - https://www.datacenterdynamics.com/en/whitepapers/powering-outcomes-your-guide-to-optimizing-data-center-total-cost-of-ownership-tco1/

[16] - https://clickbiz.in/uptime-as-a-trust-signal-in-consumers-journey/

[17] - https://www.nationalcarcharging.com/blog/uptime-isnt-a-bonusits-the-benchmark

[18] - https://mdcs.ai/the-true-cost-of-ai-infrastructure-dont-get-fooled-by-the-x-dollar-gpu-illusion/

[19] - https://www.datacenterdynamics.com/en/analysis/chipping-away-at-the-economics-of-neoclouds/

[20] - https://www.threads.com/@sergeycyw/post/DP1U9F7CJfs/cost-efficiency-amplifies-the-story-neo-clouds-deliver-2550-savings-compared-to-

[21] - https://datacentremagazine.com/top10/top-10-neocloud-companies-transforming-global-data-centers

[22] - https://www.coreweave.com/blog/burst-compute-the-practical-and-cost-effective-way-to-scale-across-thousands-of-gpus-in-the-cloud-anytime

[23] - https://www.chipin.com/nebeus-ico-digital-bank-blockchain/

[24] - https://www.fluidstack.io/

[25] - https://www.fluidstack.io/gpu-clusters

[26] - https://www.datacenterdynamics.com/en/news/fluidstack-partners-with-nvidia-borealis-and-dell-for-exascale-gpu-clusters/

[27] - https://www.datacenterdynamics.com/en/news/neocloud-segment-sees-five-year-revenue-cagr-hit-82-jll/

[28] - https://www.cloudsyntrix.com/blogs/the-neocloud-revolution-how-specialized-ai-providers-are-reshaping-enterprise-infrastructure/

[29] - https://www.jll.com/en-us/insights/the-rise-of-neocloud-in-the-ai-landscape

Linked to ObjectiveMind.ai