Storage Technologies Explained: From Hard Drives to Cloud (Beginner's Guide) Part 1

Memory Matters #20

In today's cloud-driven world, storage technologies form the critical foundation upon which our digital existence depends. Consider this: WhatsApp processes more than 97M messages per minute - each requiring instant access, secure retention, and reliable delivery through cloud infrastructure dependent on sophisticated storage systems. These storage drives don't merely house data; they actively determine cloud computing's performance boundaries, scalability limits, and reliability thresholds.

While manufacturers promise 99.999% durability, hard drives face a 15% failure rate in their first year in cloud environments. This gap poses challenges for cloud architects, as storage media needs replacement every 3-5 years, pushing organizations to evolve their cloud storage strategies continuously. The implications go beyond inconvenience—storage drive performance affects data accessibility, processing speeds, and the scalability of cloud resources to meet varying demands.

As we examine the spectrum of cloud storage technologies, from traditional spinning disks to the cutting-edge solid-state solutions that power today's distributed computing environments, I want you to gain an appreciation for these overworked and underappreciated hardware elements. We'll explore how these systems operate within modern cloud architectures and how new technology is reshaping their future.

The Evolution of Data Storage Technology

Data storage has come a long way since its early days. Each new breakthrough in storage technology has boosted our ability to save and retrieve information. This has changed how we work with data forever.

Punch cards to magnetic tape

Punch cards laid the groundwork for data storage, first appearing in textile looms in the late 18th century. Herman Hollerith revolutionized this technology in the late 1880s by adapting it to record data through holes punched in cards. His tabulating system proved fast and effective during the 1890 US Census, which finished months early and under budget. In 1928, IBM launched the "IBM Computer Card," which became the industry standard. By 1937, IBM operated 32 presses in Endicott, NY, printing 5 to 10 million punch cards daily. Despite their limitations, these cards remained crucial for data processing until the mid-1980s.

Magnetic tape emerged in the 1950s. The UNIVAC I computer introduced the "UNISERVO" tape drive in 1951, the first tape storage device for business. Users favored magnetic tape for its lower cost, ease of handling, and unlimited offline capacity. IBM enhanced this technology in 1952 with the IBM 726, featuring a "vacuum channel" method for instant tape start and stop.

The birth of hard disk drives

IBM changed storage forever in 1956 with the first commercial hard disk drive (HDD). The IBM 305 RAMAC system featured the Model 350 disk storage unit, first shipped to Zellerbach Paper in San Francisco. This device stored 5 million 6-bit characters (3.75 megabytes) across fifty 24-inch disks. It stood 5 feet high, 6 feet wide, and weighed over one ton, requiring a separate air compressor. Companies could lease it for $750 per month. This breakthrough created secondary storage, a new level in computer data storage between fast main memory and slower tape drives. Various technologies competed for about 20 years, but hard disk drives ultimately prevailed.

In 1980, two milestones emerged: IBM released the 3380, the first gigabyte-capacity disk drive, weighing 455 kg and costing $81,000, while Seagate launched the first 5.25-inch hard drive (ST-506) with 5 megabytes capacity, weighing 5 pounds and costing $1,500. These developments highlighted the industry's shift toward larger capacities in smaller sizes.

Optical storage revolution

The 1980s brought optical storage into the mainstream after decades of research. The compact disc (CD), launched in 1982, became the first widely used optical storage system. Sony and Philips initially created it for audio recording but quickly adapted it for data storage as the CD-ROM, defined by the Yellow Book standard in 1985. Good-bye to my revered cassette tape collection.

The DVD (Digital Video Disc, later Digital Versatile Disc) arrived in 1995, storing significantly more data than CDs and becoming the preferred format for movies and large data storage. A format war erupted between DVD-R (1997) and DVD+R (2002), but both formats ultimately coexisted.

Next came Blu-ray Disc technology in 2005, with a worldwide release on June 20, 2006. It uses a blue laser instead of the red laser used by DVDs, and the shorter wavelength lets Blu-ray discs store information more densely. Regular Blu-ray discs hold 25 GB per layer, while dual-layer discs (50 GB) became the standard for full-length movies.

The rise of flash memory

Flash memory revolutionized storage technology. Fujio Masuoka at Toshiba invented it in 1980 and brought it to market in 1987. Flash memory uses EEPROM technology and comes in two main types: NOR and NAND, named after the logic gates they utilize. A key moment occurred in 2004 when NAND flash prices dropped below DRAM prices, allowing flash SSDs to fit perfectly between HDDs and DRAM in the memory-storage hierarchy. Users discovered that SSDs could speed up systems enough to run jobs on one server instead of many.

In 2013, Samsung Electronics achieved another breakthrough by mass-producing 3D Vertical NAND (V-NAND) flash memory, which addressed the scaling limits of semiconductors. This innovation provided the industry with the technology for mass-storage NAND flash, ushering in the terabyte era.

Flash memory outperforms traditional storage in several ways. It reads data faster and handles physical shock better, making it ideal for portable devices. It writes large amounts of data more quickly than non-flash EEPROM due to its erase cycle features. Today, flash memory dominates portable and mobile computing storage and is significantly cheaper than byte-programmable EEPROM.

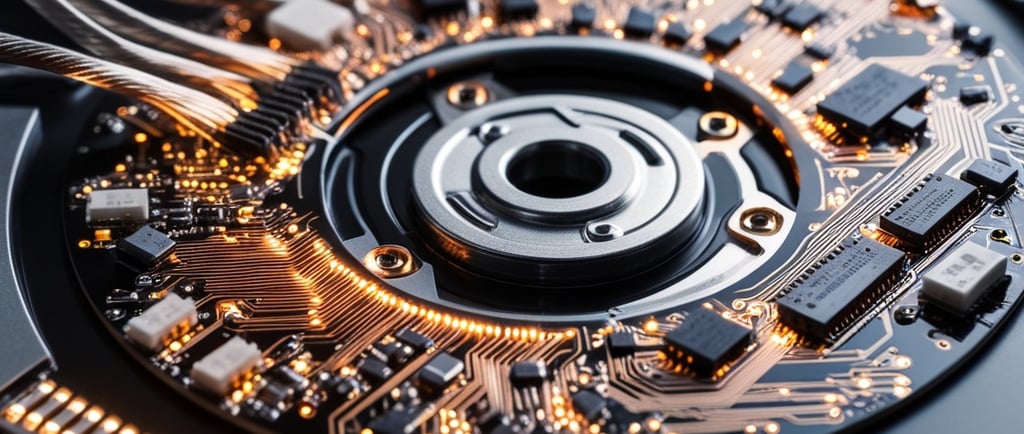

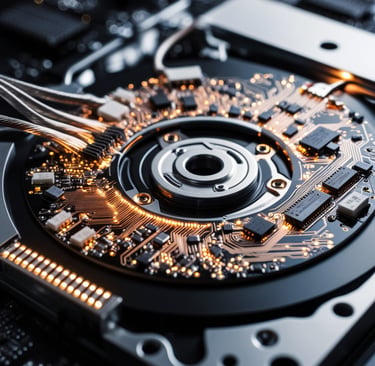

How Hard Drives Work

Traditional hard disk drives (HDDs) remain a foundation of mass storage despite newer technologies. These devices showcase remarkable engineering that packs massive data capacity into relatively small packages.

Mechanical Engineering

A hard drive's heart consists of several mechanical elements that work together seamlessly. The main components include:

Platters: Circular disks made of aluminum, glass, or ceramic, coated with a magnetic layer that stores data. Modern HDDs stack multiple platters around a central spindle.

Spindle motor: Rotates the platters at steady speeds ranging from 5,400 to 15,000 revolutions per minute (RPM). I still remember going to Fry’s Electronics and standing in the aisle reading all the HDD specs.

Read/write heads: Tiny transducers that hover above each platter surface and convert between magnetic and electrical signals.

Actuator arm: Moves the read/write heads across the platters to access different data locations.

Voice coil motor: Controls the actuator arm with precision.

These parts work inside a sealed chamber that keeps contaminants out. A single dust particle can damage the system since read/write heads float just 3 nanometers above the platter surface.

Read/write processes

HDDs store information in binary code through magnetized regions on the platters. The process relies on electromagnetic principles: the write head uses electrical current to generate a magnetic field, changing the orientation of the platter's magnetic material to represent the 1s and 0s of binary data.

The read process works in reverse. As the platter spins, the magnetized regions passing beneath the read head produce small electrical signals. The system amplifies these analog signals and converts them to digital data that computers can process. Platters use tracks (concentric circles) and sectors (pie-shaped wedges) to create a coordinate system for data location. This setup lets the drive find specific data without reading everything before it.
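To make the track-and-sector coordinate system concrete, here is a minimal sketch of the classic cylinder/head/sector (CHS) addressing scheme and its conversion to a single logical block address (LBA). The geometry values and the names `Geometry`, `CHS`, `chs_to_lba`, and `lba_to_chs` are assumptions chosen for illustration. Modern drives hide their real physical layout behind LBAs, so treat this as a teaching model rather than a description of any particular drive.

```python
from typing import NamedTuple


class Geometry(NamedTuple):
    heads: int              # read/write heads, one per platter surface
    sectors_per_track: int  # sectors on each track


class CHS(NamedTuple):
    cylinder: int  # track position across the platter (0-based)
    head: int      # which platter surface (0-based)
    sector: int    # sector within the track (1-based by convention)


def chs_to_lba(addr: CHS, geo: Geometry) -> int:
    """Flatten a (cylinder, head, sector) coordinate into one block number."""
    return (addr.cylinder * geo.heads + addr.head) * geo.sectors_per_track + (addr.sector - 1)


def lba_to_chs(lba: int, geo: Geometry) -> CHS:
    """Recover the coordinate from a block number (inverse of chs_to_lba)."""
    cylinder, rest = divmod(lba, geo.heads * geo.sectors_per_track)
    head, sector0 = divmod(rest, geo.sectors_per_track)
    return CHS(cylinder, head, sector0 + 1)


geo = Geometry(heads=16, sectors_per_track=63)  # assumed example geometry
addr = CHS(cylinder=2, head=3, sector=4)
lba = chs_to_lba(addr, geo)
print(lba)                   # 2208
print(lba_to_chs(lba, geo))  # back to cylinder 2, head 3, sector 4
```

Because the mapping is pure arithmetic, the drive can jump straight to any block without scanning the ones before it.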

Interfacing with system memory

Data moves from hard drives to CPUs through a specific path: Your computer's CPU sends commands through a bus system that connects RAM, storage devices, and other components.

Direct Memory Access (DMA) handles data transfers in most systems. The CPU tells the DMA controller what data it needs and where to put it in RAM. The controller manages the transfer on its own, which lets the CPU handle other tasks. Once it finishes, the controller signals the CPU through a hardware interrupt. Remember the interview question: “Describe an interrupt and how it works”?

The operating system manages this process. Hard drives understand simple block-level commands ("read block 1234," "write to block 5678"), but the OS creates a file system that organizes these blocks into files and directories. This system makes storage accessible to applications and users.
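The gap between block-level commands and files can be sketched in a few lines. The toy model below is an illustration under stated assumptions, not how any real file system is implemented: `BlockDevice`, `ToyFileSystem`, and the 512-byte block size are all hypothetical, and there are no directories, permissions, or crash safety.

```python
BLOCK_SIZE = 512  # bytes per block (assumed for illustration)


class BlockDevice:
    """Stand-in for a drive: it only knows numbered, fixed-size blocks."""

    def __init__(self, num_blocks: int) -> None:
        self.blocks = [bytes(BLOCK_SIZE)] * num_blocks

    def read_block(self, n: int) -> bytes:
        return self.blocks[n]

    def write_block(self, n: int, data: bytes) -> None:
        self.blocks[n] = data.ljust(BLOCK_SIZE, b"\0")  # pad to a full block


class ToyFileSystem:
    """Maps file names to lists of block numbers on the device."""

    def __init__(self, device: BlockDevice) -> None:
        self.device = device
        self.free = list(range(len(device.blocks)))
        self.table = {}  # file name -> (block numbers, size in bytes)

    def write_file(self, name: str, data: bytes) -> None:
        old_blocks, _ = self.table.pop(name, ([], 0))
        self.free.extend(old_blocks)  # overwriting releases the old blocks
        chunks = [data[i:i + BLOCK_SIZE] for i in range(0, len(data), BLOCK_SIZE)]
        if len(chunks) > len(self.free):
            raise OSError("not enough free blocks")
        used = []
        for chunk in chunks:
            n = self.free.pop(0)
            self.device.write_block(n, chunk)
            used.append(n)
        self.table[name] = (used, len(data))

    def read_file(self, name: str) -> bytes:
        used, size = self.table[name]
        raw = b"".join(self.device.read_block(n) for n in used)
        return raw[:size]  # drop the padding in the last block


fs = ToyFileSystem(BlockDevice(num_blocks=8))
fs.write_file("notes.txt", b"x" * 1300)
print(fs.table["notes.txt"])           # block numbers used, plus the file size
print(len(fs.read_file("notes.txt")))  # 1300
```

The application only ever sees a name and some bytes. The table that remembers which blocks belong to which file is the part the operating system adds.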

Speed limitations and bottlenecks

HDDs face physical limits that affect their performance: Access time creates delays before data transfers begin. This includes seek time (head movement to the right track), rotational latency (waiting for the sector to align), and command processing time.

Consumer drives have average seek times of 4-9 milliseconds. These small delays add up quickly across many operations. A 7,200 RPM drive adds about 4.17 milliseconds of average rotational latency, the time for half a revolution. The mechanical nature of HDDs creates speed limits: moving parts slow down data access, especially for random operations. Even with fast SATA 3 connections (6 Gbps), most HDDs can't saturate the older SATA 2 interface (3 Gbps) because of these mechanical constraints.
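The 4.17 millisecond figure falls out of simple arithmetic: on average the sector you want is half a revolution away. A short sketch, where the function names and the 9 ms seek time are assumptions picked from the range quoted above:

```python
def rotational_latency_ms(rpm: int) -> float:
    """Average wait for the sector to arrive: half of one revolution."""
    ms_per_revolution = 60_000 / rpm
    return ms_per_revolution / 2


def average_access_ms(rpm: int, seek_ms: float, overhead_ms: float = 0.0) -> float:
    """Seek time + rotational latency + command processing overhead."""
    return seek_ms + rotational_latency_ms(rpm) + overhead_ms


for rpm in (5_400, 7_200, 15_000):
    print(f"{rpm:>6} RPM: {rotational_latency_ms(rpm):.2f} ms average rotational latency")

# Assumed 9 ms average seek on a 7,200 RPM consumer drive
print(f"access time: {average_access_ms(7_200, seek_ms=9.0):.2f} ms")
```

Faster spindles shorten the wait, but even a 15,000 RPM drive still spends about 2 ms per access just waiting for the platter to turn.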

SSDs highlight this performance gap. Standard HDDs read and write between 30-150 megabytes per second. SSDs reach speeds over 500 megabytes per second because they don't have moving parts.

Solid State Drives: The Game Changer

SSDs have completely changed what we expect from storage performance. They represent a huge leap forward from traditional hard drives. These drives store data electronically without moving parts, which makes them faster and more durable.

NAND flash technology

NAND flash memory sits at the core of every SSD. This semiconductor-based technology stores and retrieves data through integrated circuits. Data lives in flash memory cells that make up complex structures. Each NAND flash memory chip has one or more dies, and each die contains one or more planes. These planes break down into blocks, which further divide into pages.
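The die, plane, block, and page hierarchy can be pictured as nested coordinates. The sketch below uses an entirely hypothetical geometry (real chips vary widely, and real controllers interleave addresses across dies and planes in more elaborate ways) to show how a flat page number could break down into the four levels.

```python
from typing import NamedTuple


class NandGeometry(NamedTuple):
    dies: int
    planes_per_die: int
    blocks_per_plane: int
    pages_per_block: int
    page_bytes: int

    def total_pages(self) -> int:
        return self.dies * self.planes_per_die * self.blocks_per_plane * self.pages_per_block

    def capacity_bytes(self) -> int:
        return self.total_pages() * self.page_bytes


def locate_page(page_index: int, geo: NandGeometry) -> tuple:
    """Split a flat page number into (die, plane, block, page)."""
    if not 0 <= page_index < geo.total_pages():
        raise ValueError("page index outside the chip")
    rest, page = divmod(page_index, geo.pages_per_block)
    rest, block = divmod(rest, geo.blocks_per_plane)
    die, plane = divmod(rest, geo.planes_per_die)
    return die, plane, block, page


# Assumed example chip: 2 dies x 2 planes x 1,024 blocks x 256 pages of 16 KiB
geo = NandGeometry(dies=2, planes_per_die=2, blocks_per_plane=1024,
                   pages_per_block=256, page_bytes=16 * 1024)
print(geo.capacity_bytes() // 2**30, "GiB")  # 16 GiB
print(locate_page(300_000, geo))             # (0, 1, 147, 224)
```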

Memory cells, the basic building blocks, trap electrical charges between insulator layers. The floating gate transistors convert different charges into binary ones and zeroes—the fundamental language of digital data.

NAND flash memory comes in several types based on how many bits each cell stores:

Single-Level Cell (SLC): 1 bit per cell (highest performance and endurance, at a premium cost)

Multi-Level Cell (MLC): 2 bits per cell (balancing performance and cost)

Triple-Level Cell (TLC): 3 bits per cell (greater density at lower cost)

Quad-Level Cell (QLC): 4 bits per cell (maximizing capacity at the expense of durability)

Each extra bit reduces performance, endurance, and reliability because charge states become harder to tell apart.
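Why do charge states get harder to tell apart? Storing n bits means the cell must hold one of 2^n distinct charge levels inside the same voltage window. The sketch below uses the common abbreviations SLC, MLC, TLC, and QLC for the four cell types; the "share of window" column is a deliberate simplification that assumes the levels are evenly spaced.

```python
CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}  # bits stored per cell


def charge_states(bits_per_cell: int) -> int:
    """Number of distinct charge levels a cell must hold to store n bits."""
    return 2 ** bits_per_cell


for name, bits in CELL_TYPES.items():
    states = charge_states(bits)
    # Simplified model: each state gets an equal slice of the voltage window
    print(f"{name}: {bits} bit(s) -> {states:>2} states, {100 / states:.2f}% of the window each")
```

Going from SLC to QLC multiplies capacity by four but squeezes sixteen levels into the space that used to hold two, which is why reads and writes need more care and cells wear out sooner.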

Why SSDs are faster than HDDs

SSDs' speed comes from their lack of moving parts. They access data almost instantly, no matter where it sits on the drive. While HDDs transfer data at 30-150 MB/s, SSDs reach speeds of 500 MB/s to 3,500 MB/s or higher. You'll notice this difference most when booting up or loading applications. Computers with SSDs start up in seconds, while HDD-based systems take much longer.

SSDs also handle random access operations better, that is, reading small pieces of data from many different locations. This capability makes your operating system and applications more responsive. Even basic SSDs read and write at 50-250 MB/s for these tasks, while HDDs only manage 0.1-1.7 MB/s.
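The random-access gap follows from per-request delay. If a drive serves one small request at a time, its throughput is simply requests per second times request size. The access times below are assumptions for illustration, not measurements, and the model ignores queuing and the internal parallelism that real SSDs exploit.

```python
def random_throughput_mb_s(access_ms: float, request_bytes: int = 4096) -> float:
    """Throughput when every request pays the full access time, one at a time."""
    iops = 1000 / access_ms  # requests completed per second
    return iops * request_bytes / 1_000_000


hdd = random_throughput_mb_s(access_ms=13.0)  # assumed: seek plus rotational latency
ssd = random_throughput_mb_s(access_ms=0.05)  # assumed: flash read plus controller overhead
print(f"HDD: {hdd:.2f} MB/s")
print(f"SSD: {ssd:.2f} MB/s")
```

Under these assumptions the HDD lands well under 1 MB/s while the SSD reaches tens of MB/s, the same order-of-magnitude gap as the ranges quoted above.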

Form factors and interfaces

SSDs come in different shapes and sizes to fit various needs:

2.5-inch: Matches laptop HDD bays, making upgrades easy.

mSATA: Packs the same power into one-eighth of that space, perfect for thin devices or extra desktop storage.

M.2: Today's leading form factor. These gum stick-sized drives plug straight into motherboards, which saves space and eliminates cable clutter.

The interface determines data flow between drive and computer. Standard SSDs use the SATA interface, originally built for HDDs, with speeds up to 600 MB/s.

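NVMe (Non-Volatile Memory Express) marked a big step forward. This SSD-specific protocol uses PCIe connections and runs three to ten times faster than SATA. A PCIe 4.0 x4 link, the usual connection for an NVMe SSD, can move roughly 8,000 MB/s in each direction, while SATA III stops at 600 MB/s. The often-quoted 64,000 MB/s figure is the combined two-way bandwidth of a full 16-lane PCIe 4.0 slot, not what a single drive sees.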
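These interface ceilings can be derived from the raw signaling rate and the line encoding each standard uses: SATA III runs at 6 Gbit/s with 8b/10b encoding, and PCIe 3.0 and 4.0 run at 8 and 16 GT/s per lane with 128b/130b encoding. The helper name `link_mb_s` is an assumption, and the results are upper bounds before protocol overhead, so real drives land somewhat lower.

```python
def link_mb_s(gigatransfers_per_s: float, payload_bits: int,
              encoded_bits: int, lanes: int = 1) -> float:
    """Usable one-direction bandwidth in MB/s after line-encoding overhead."""
    usable_bits_per_s = gigatransfers_per_s * 1e9 * payload_bits / encoded_bits * lanes
    return usable_bits_per_s / 8 / 1e6


sata3 = link_mb_s(6, 8, 10)                        # 8b/10b encoding
pcie3_x4 = link_mb_s(8, 128, 130, lanes=4)         # 128b/130b encoding
pcie4_x4 = link_mb_s(16, 128, 130, lanes=4)        # typical NVMe SSD link
pcie4_x16 = link_mb_s(16, 128, 130, lanes=16)      # full-width slot

print(f"SATA III:     {sata3:>9,.0f} MB/s")
print(f"PCIe 3.0 x4:  {pcie3_x4:>9,.0f} MB/s")
print(f"PCIe 4.0 x4:  {pcie4_x4:>9,.0f} MB/s")
print(f"PCIe 4.0 x16: {pcie4_x16:>9,.0f} MB/s per direction")
```

Doubling the x16 result to count both directions gives about 63,000 MB/s, which is where headline PCIe 4.0 numbers come from. A single NVMe drive on four lanes tops out near 7,900 MB/s.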

The evolution of storage technologies, from punch cards to magnetic tape and from hard drives to solid-state solutions, has shaped modern cloud computing. The shift from HDDs to NVMe SSDs marked a fundamental change in cloud data processing. An NVMe drive on a PCIe 4.0 x4 link can move roughly 8,000 MB/s, far beyond SATA III's 600 MB/s, enabling cloud services to handle data-intensive workloads with lower latency. This performance improvement leads to better application responsiveness, higher transaction throughput, and enhanced resource utilization in virtualized environments.

Looking ahead, emerging storage paradigms like computational storage—bringing processing closer to data—and specialized implementations for cloud workloads will redefine possibilities in distributed computing. The trajectory is clear: as storage technology evolves, future cloud infrastructures will use specialized, high-performance storage solutions to meet the growing demands of our digital economy. Memory continues to be key to this evolving solution.

Linked to ObjectiveMind.ai